Deep learning in land care research

The Problem:

Teaching artificial intelligence (AI) to identify complex, variable shapes without using exhaustive computing power.

The Solution:

Dr Brent Martin is looking at ways to use Mahuika as a centralised platform for training and testing an existing automated spatial imaging pipeline, as well as for testing and developing other deep learning experiments.

The Outcome:

Higher accuracy in image recognition that can potentially discover hidden patterns in imagery that a human user wouldn’t recognise. These hidden relationships might then shed light on valuable new information in a range of fields, from land cover mapping to landslide detection, and in various image classification domains, from detecting predator species in camera trap images to identifying species of beech pollen from microscope slides.

Image recognition is one of the enduring problems in deep learning systems. It is prone to inaccuracies and needs large computing resources to train.

Despite these challenges, deep learning has proven to offer state-of-the-art performance, not only in classifying images but also in detecting objects and semantically segmenting images.

Dr Brent Martin is a deep learning expert at Manaaki Whenua - Landcare Research. He and others are building an automated spatial imaging pipeline that will allow researchers to apply deep learning to remote sensing imagery, with applications in land use/cover mapping and other domains, as well as making it easier to experiment with new deep learning approaches.

“We’re looking to be able to map land use and cover on a weekly basis to see what changes with the seasons, and to detect and map ecosystems such as ephemeral wetlands that come and go. This approach gives you that ability to do time series and high-resolution image analysis,” Brent said.

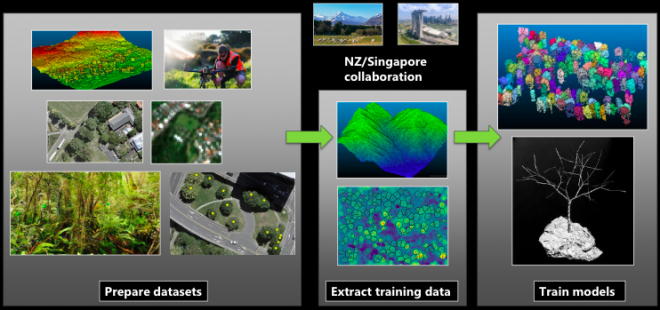

One example of Manaaki Whenua’s current research is a collaborative project (led by Jan Schindler) across various New Zealand and Singapore research institutes and universities that uses light detection and ranging (LiDAR) point clouds to accurately map tree crowns in 3D. It presents a deceptively tricky problem: teaching AI to identify complex, variable shapes without using exhaustive computing power.

“We're looking at a few different techniques, but one of them is looking at the top-down 2D view, and whether you can accurately extract individual trees from that image, and then use it to segment the LiDAR point cloud,” Brent said. “Our first project output would be showing we can do a tree crown map that accurately segments and identifies the species of every tree in the Wellington urban region.”
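
The idea of using a top-down 2D result to segment a point cloud can be illustrated with a minimal sketch. It assumes the 2D step has already produced a raster of tree IDs (0 meaning "no tree"), and it simply gives each LiDAR point the ID of the raster cell it falls in. The function name, the raster layout (row 0 at the southern edge) and the sample data are all hypothetical and are not taken from the project's pipeline.

```python
from collections import defaultdict


def label_points_from_crown_raster(points, crown_raster, origin, cell_size):
    """Group LiDAR points by the tree ID of the 2D raster cell they fall in.

    points       -- iterable of (x, y, z) tuples in map coordinates
    crown_raster -- list of rows; crown_raster[row][col] is a tree ID, 0 = no tree
                    (assumption: row 0 is the southern edge of the raster)
    origin       -- (x_min, y_min) of the raster's south-west corner
    cell_size    -- width and height of one raster cell, in map units
    """
    x0, y0 = origin
    n_rows = len(crown_raster)
    n_cols = len(crown_raster[0]) if n_rows else 0
    segments = defaultdict(list)
    for x, y, z in points:
        # Floor division maps a coordinate to its cell index.
        col = int((x - x0) // cell_size)
        row = int((y - y0) // cell_size)
        inside = 0 <= row < n_rows and 0 <= col < n_cols
        # Points outside the raster are treated as "no tree".
        tree_id = crown_raster[row][col] if inside else 0
        segments[tree_id].append((x, y, z))
    return dict(segments)


# Hypothetical 2 x 3 crown map with two trees (IDs 1 and 2).
crown_raster = [
    [1, 1, 0],
    [1, 2, 2],
]
points = [
    (0.5, 0.5, 12.0),
    (1.5, 1.5, 9.0),
    (2.5, 0.2, 0.3),
    (2.9, 1.1, 8.5),
    (5.0, 5.0, 1.0),
]
segments = label_points_from_crown_raster(
    points, crown_raster, origin=(0.0, 0.0), cell_size=1.0
)
for tree_id, pts in sorted(segments.items()):
    print(tree_id, len(pts))
```

A purely vertical lookup like this ignores overlapping crowns and understorey, which is part of why the problem described here is harder than it first appears.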

Plant morphology is a uniquely challenging area of deep learning application. AI image recognition stumbles at grouping morphologically diverse subjects. While a human might be able to quickly group trees by their similarities, an AI’s classification attempt can falter on such variable classes.

This is a problem even for single plant images. This project involves mapping swathes of land, often with tight tree clumps that further confuse AI classification. Using NeSI’s Mahuika platform, Brent could quickly train the pipeline using Manaaki Whenua map data and he was able to run multiple scenarios at once to test different approaches and parameters.

“At the moment, Manaaki Whenua uses a number of semi-manual pipelines for tasks such as land cover and land use mapping. It does include some sophisticated image analysis techniques, but it isn't using some of the more cutting-edge techniques like deep learning. We're looking at how we can further automate those processes to make them more efficient so they can be run more frequently, for example to keep up with the rate that satellite imagery is now being produced.”

While the project’s initial intent was to build a pipeline for automated tree crown extraction, the pipeline is being developed to be very general, so its applications for image recognition could be used in a wide range of mapping tools. The pipeline and associated training material will be available to all researchers at Manaaki Whenua, and Brent would ideally like to see it used and further developed by other researchers who use NeSI.

“NeSI has given us an excellent centralised platform to run this on. My intent is to put up a sandpit project on there for people doing this kind of research. They can get a NeSI account and put their images on there and do experiments with our pipeline,” Brent said.

The project is currently at the stage of training networks for various domains, including tree crown segmentation, tree species detection, land cover mapping, landslide detection and various image classification domains from detecting predator species in camera trap images to identifying species of beech pollen from microscope slides.

Brent is also undertaking more fundamental research into deep learning, including exploring methods for producing accurate 2D visualisations of what the networks are “looking at”, and optimising network architectures to reduce their size, which would significantly cut down computing resources.
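
One widely used way to visualise what a network is "looking at" is occlusion sensitivity: mask one patch of the image at a time and record how much the network's score drops. The sketch below shows the idea in plain Python. The article does not say which visualisation methods the project uses, so this is an illustration only, and `toy_score` is a hypothetical stand-in for a trained network.

```python
def occlusion_sensitivity(image, score_fn, patch=2, fill=0.0):
    """Return a heat map of score drops when each patch of the image is masked.

    image    -- 2D list of pixel values
    score_fn -- callable taking an image and returning a single score
    patch    -- side length of the square patch to mask
    fill     -- value written into masked pixels
    """
    rows, cols = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            cells = [
                (r, c)
                for r in range(r0, min(r0 + patch, rows))
                for c in range(c0, min(c0 + patch, cols))
            ]
            for r, c in cells:
                occluded[r][c] = fill
            # A large drop means the model relied on this patch.
            drop = baseline - score_fn(occluded)
            for r, c in cells:
                heat[r][c] = drop
    return heat


def toy_score(image):
    # Hypothetical stand-in for a trained network: it only responds
    # to the mean brightness of the top-left 2 x 2 corner.
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_sensitivity(image, toy_score)
for row in heat:
    print(row)
```

With a real network, `score_fn` would return the predicted probability for the class of interest, and the resulting heat map would be overlaid on the input image.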

Accurate 2D image recognition with smaller networks could open up other fields, enabling small-scale automation that runs on a modest GPU setup, including on devices such as drones, monitoring stations and traps.

“I’ve got my own GPU workstation at home that I work on. Often, maintaining CUDA libraries and other tools for open-source projects is really tricky when you’re porting between machines and across framework versions. NeSI has made it a breeze to get these projects ported in and running. It’s well-set-up with its tools and modules,” Brent said.

In the project pipeline, the deep learning network automatically extracts image features from the training data, rather than relying on users to hand-craft them. This leads to higher accuracy in image recognition for much less human effort, and can potentially discover hidden patterns in the imagery that a human user wouldn’t recognise. These hidden relationships might yield new information about how New Zealand’s land cover changes over time.

“It’s not just for mapping per se. We can potentially use deep learning in areas like mapping soil moisture on farms from a variety of spatial inputs and seeing that change on a day-to-day basis. It would give you management data like never before.”

Brent presented a talk with Aleksandra Pawlik, eResearch Capability Specialist at Manaaki Whenua, at NeSI’s eResearch NZ 2021 conference. The session, Starting an eResearch revolution with deep learning, was an exploration of the benefits and pitfalls of different deep learning approaches and their applications in this project.

“I was really impressed by the breadth of knowledge and the enthusiasm of all the NeSI team who were present there. I think that they do a really good job of kind of nurturing the New Zealand research ecosystem.”

The presentation covered object classification to 95% accuracy, visualising the behaviour of the otherwise “black box” methodology, and training networks using NeSI infrastructure. It highlighted how much deep learning image recognition benefits from NeSI: for example, training on the MNIST image test database dropped from eight hours on a CPU to 2.5 minutes on a single GPU on NeSI’s Mahuika system. LiDAR pre-processing also benefits greatly from being parallelised across Mahuika’s thousands of CPU nodes.
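
To put the MNIST figures in perspective, the implied speed-up can be worked out directly. This is simple arithmetic on the two times quoted above, not a benchmark; the variable names are illustrative.

```python
# Training times quoted in the presentation.
cpu_minutes = 8 * 60   # eight hours on a CPU
gpu_minutes = 2.5      # 2.5 minutes on a single GPU

speedup = cpu_minutes / gpu_minutes
print(f"GPU speed-up: {speedup:.0f}x")  # prints "GPU speed-up: 192x"
```

A speed-up of this size is what makes it practical to run many training scenarios side by side when comparing approaches and parameters.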

While there are still challenges to overcome in deep learning image recognition, Brent’s research and NeSI's HPC platform are helping provide a valuable sandbox to test approaches, while building powerful new automation tools for Manaaki Whenua’s map data.

Do you have an example of how NeSI support or platforms have supported your work? We’re always looking for projects to feature as a case study. Get in touch by emailing support@nesi.org.nz.