Examining the Earth’s electro-magnetic field in 3D

Non-invasive earth sciences research enabled with clever programming and powerful hardware. With support from NeSI staff, a researcher at the University of Auckland has been able to make use of the message passing interface and GPU programming to enable work across compute nodes and hardware devices.

John Rugis is a member of the University of Auckland's Institute of Earth Science and Engineering. In the earth sciences, a number of different electro-magnetic techniques are used to non-invasively acquire information about underground material properties and objects. One of these techniques, magnetotellurics, takes advantage of the naturally occurring time varying electromagnetic field that surrounds the earth.

The lowest frequency components of this field penetrate through the surface of the earth to a depth of up to tens of kilometres. Differing subsurface materials reflect this field and careful measurements of these reflections can be used to create 3D images of the subsurface through a computational process known as inversion.

The goal in this project was to explore the possibility of speeding-up the most time consuming step of the inversion process – the forward modelling. To achieve this goal, we selected combined CPUs across multiple nodes via the message passing interface (MPI) and NVIDIA GPUs.

How a supercomputer is constructed

High performance computing clusters are made up of multiple nodes. Each node is a physical box containing multiple CPU chips, e.g. integrated circuits, local disk drives and memory. Each CPU in turn contains multiple cores, each of which can process one thread of execution. Getting the CPUs working together on a common task requires coordination and communication.

Different nodes within each cluster are often given different names according to their role. On the NeSI Pan cluster, there are compute nodes, GPU nodes and high-memory nodes. The specialised parallel file system that supports each of NeSI's cluster also requires its own nodes to support I/O.

Making use of multiple nodes and multiple devices

Simultaneous utilisation of multiple compute nodes requires careful problem decomposition and parallel programming techniques.

Problem decomposition is the process of splitting up a problem into smaller components. In the general case, this involves splitting the problem into a grid and allocating a CPU to each block. At the end of each step in a simulation, CPUs talk to their neighbours to let them know what happened. This decomposition process allows multiple parts of large, complex systems to be processed in parallel.

Parallel programming calls on the use of MPI, OpenMP, or some other technique, to pass information between each processor at key times in the processing sequence. Problem decomposition is not the end of the story, information must be shared to keep the individual components on track. However, the time spent sending messages reduces the performance gain of parallelisation. Getting the balance right between parallel decomposition and message passing overhead is challenging.

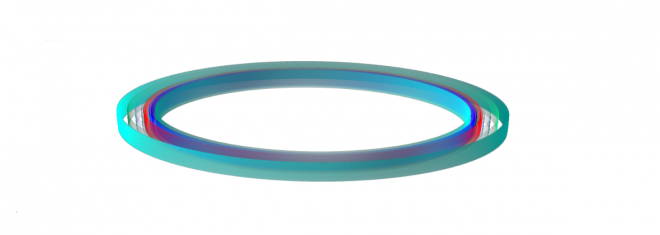

Reference models that run in serial are used to confirm the correctness of parallel programming implementations. John Rugis spent a lot of time comparing the results of the reference model against those produced by his subsequent parallelisation efforts. Figure 1 shows a reference visualisation of simulated electric field in a 100 × 100 × 100 model space after 120 time steps. The results after parallelisation and scaling-up were a perfect match!

Encouraging results

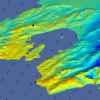

Electromagnetic theory says that over time, concentric rings should appear. In order to validate this, the final model size in this project was increased to 848 × 848 × 848. A slice of the electric field after 550 steps is shown in Figure 2. What we see are the concentric rings forming - which is exactly what we expected from the theory.

Figure 1: Electric field model in reference model

Figure 2: Electric field slice in final model

Using GPU nodes on the NeSI Pan cluster

Using eight GPU nodes on the Pan cluster at the University of Auckland, John's team were able to achieve an 80-fold overall speedup in forward modelling run time. He explains what this means:

"With this success, we are on-track to reduce typical 3D magnetotelluric inversion run times from over a week to less than a day.

"The NVIDIA CUDA software development tools on NeSI's Pan were used extensively for coding, debugging and profiling. OpenMPI libraries were used for inter-node communication. We could not have even considered this work without access to specific high-performance computing facilities such as those available at the University of Auckland. The support and technical assistance from the staff at the Centre for eResearch of the University of Auckland was instrumental in our success.

"Parallel computing is central in our research leading to more robust and efficient methods. Our parallel computing success has helped us to meet and exceed client expectations in our commercial work.

"We plan to further optimise our techniques and to continue scaling up to use additional GPU nodes as they become available.

Staff based at the Centre for eResearch are available to support all researchers via NeSI's Computational Science Team.

Further Reading

- For those interested in understanding more about CUDA, take a look at NVIDIA's CUDA Training webpage for developers, which includes interactive training material, presentation notes and recordings.

- John presented at eResearch 2013's New Zealand HPC Applications Workshop. His slides are available via eresearch.org.nz. As well as presenting his science, he provides CUDA source code, profiling tips and visualisation examples.

- John has a strong research background in scientific 3D visualisation, with a particular interest in visualising geophysical processes. You can see many examples of his work on his webpage.

- Members of the Computational Science Team are at hand if you would like to discuss applying GPU programming to your problem domain.

Acknowledgement

This case study is based on the original, produced by the Centre for eResearch at the University of Auckland.